Docker Swarm in GCP

August 25, 2022

This post documents how I learned Docker Swarm.

Background

Container orchestration automates most of the operational effort required to run workloads and services in containers. This effort includes deployment, management, scaling, networking, load balancing, and more. Tools that provide it include Kubernetes, Docker Swarm, HashiCorp Nomad, and others.

I have already used Kubernetes in production. This blog post records what I learned while trying Docker Swarm.

Why use Container Orchestration?

Simply put, 3 containers are easy to manage by hand. With tens or hundreds of containers, it becomes much easier to run them in a distributed fashion under a container orchestrator. An orchestrator also brings features that keep the containers running and healthy, among others.

Practice Cases

Let's deploy a scenario to put things into practice. The scenario consists of several simple HTTP services running on Docker Swarm, hosted here on Google Cloud Platform (GCP).

Prerequisites

- Docker (see the official Docker documentation for installing on Ubuntu)

- GCP Project

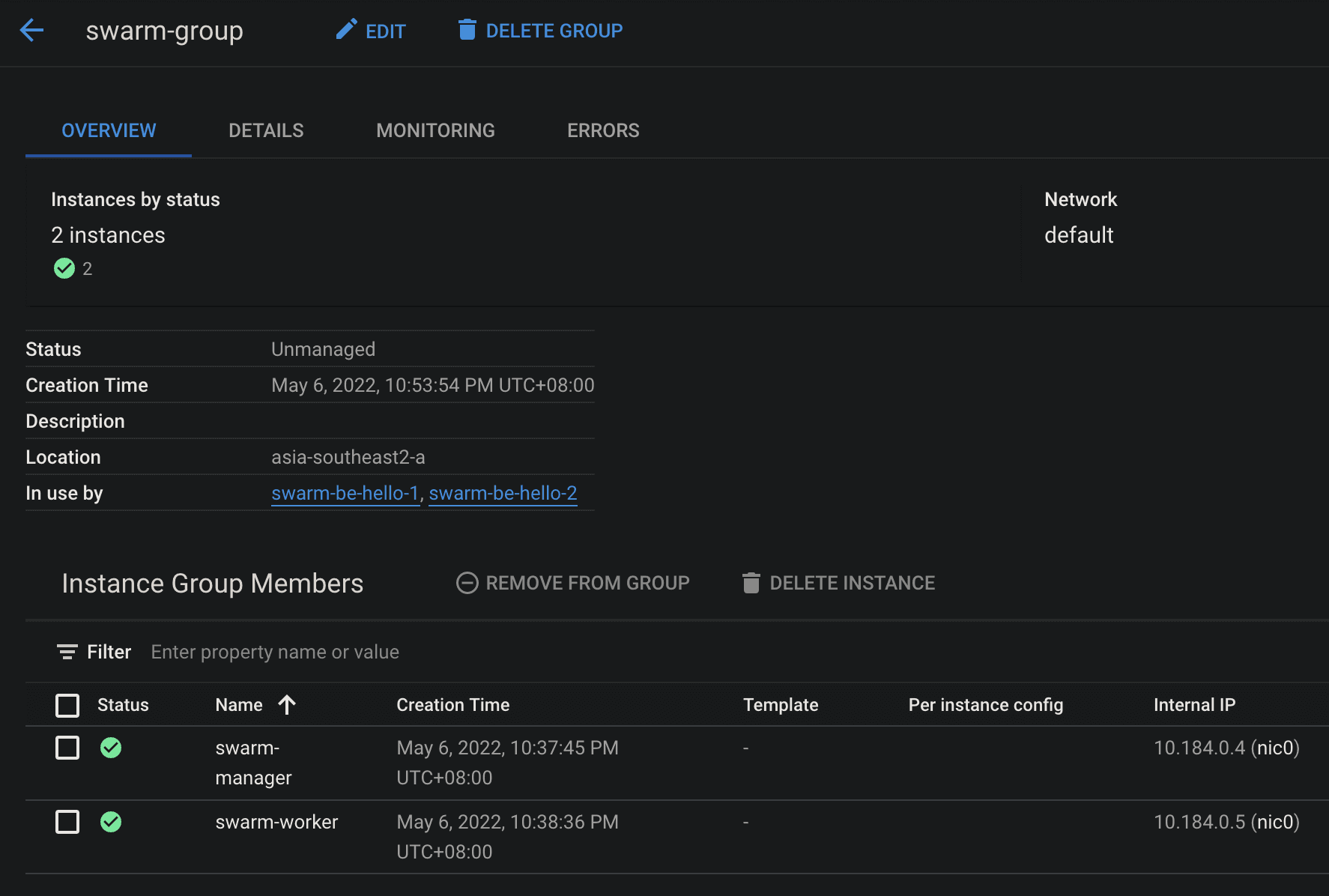

- 2 Compute Engine instances with Docker installed

Architectural Overview

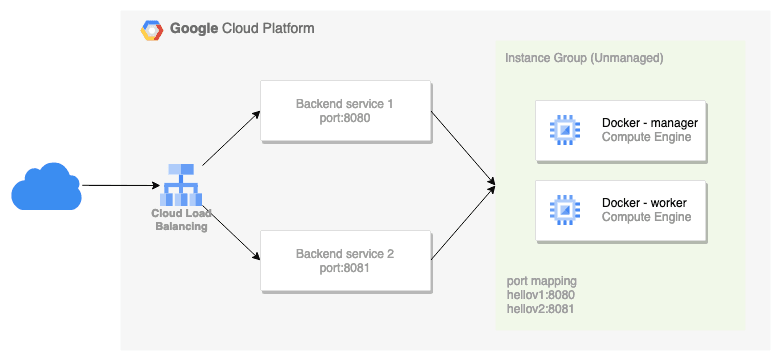

We will expose a simple HTTP service so that it can be accessed from the internet. Each Docker host is a member of an unmanaged instance group, and this instance group is the backend of the load balancer's backend services.

The instance group defines 2 named ports, one for each service. The load balancer routes requests to the backend services based on the URL map.

Docker Swarm

Open up SSH sessions on each of the nodes.

Initialize the swarm on the manager host:

manager:~$ sudo docker swarm init

# output

Swarm initialized: current node (yfswsafnaarbf1c29n1uknx5b) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token <TOKEN> 10.184.0.4:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

The output shows how to add a worker to the swarm: the join command takes the token plus the manager's IP address and port 2377, which is used for cluster management. If needed, add a firewall rule that allows TCP port 2377 on the manager node.
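On GCP, such a firewall rule could be created with gcloud. The following is a minimal sketch, not the exact setup used in this post: the rule name allow-swarm, the network tag swarm-node, the default network, and the source range 10.184.0.0/20 (guessed from the manager IP above) are all assumptions to adjust for your project. Besides 2377, Swarm also uses 7946 (TCP and UDP) for communication between nodes and 4789 (UDP) for overlay network traffic, so the sketch opens those as well.

```shell
# Hypothetical names: adjust the rule name, network, tag, and range to your project.
open_swarm_ports() {
  # 2377/tcp: cluster management
  # 7946/tcp+udp: communication among nodes
  # 4789/udp: overlay network (VXLAN) traffic
  gcloud compute firewall-rules create allow-swarm \
    --network=default \
    --allow=tcp:2377,tcp:7946,udp:7946,udp:4789 \
    --source-ranges=10.184.0.0/20 \
    --target-tags=swarm-node
}
```

Calling open_swarm_ports once from a machine with gcloud configured would create the rule. The instances need the swarm-node tag for it to apply.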

Copy and run the command on the worker host:

worker:~$ sudo docker swarm join --token <TOKEN> 10.184.0.4:2377

# output

This node joined a swarm as a worker.

To view cluster nodes:

sudo docker node ls

To add another node as a manager or worker, all you need is the manager's IP address and a join token. To display the full join command, run the following for a manager:

sudo docker swarm join-token manager

or the following for a worker:

sudo docker swarm join-token worker
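When scripting this, the -q flag of docker swarm join-token prints only the token, which makes it easy to assemble the join command yourself. A small sketch, where the function name join_command and its arguments are my own illustration:

```shell
# Prints the join command for the given role ("worker" or "manager").
# Must be run on a manager node. $2 is the manager's IP address.
join_command() {
  role="$1"
  manager_addr="$2"
  # -q (--quiet) prints only the token
  token="$(sudo docker swarm join-token -q "$role")"
  echo "docker swarm join --token $token $manager_addr:2377"
}
```

For example, join_command worker 10.184.0.4 prints the command to run on a new worker host.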

Deploy Service

On the manager host, deploy 2 versions of the service app, published on ports 8080 and 8081 respectively:

sudo docker service create --name my-web --replicas 2 -p 8080:8080 gcr.io/google-samples/hello-app:1.0

sudo docker service create --name my-web-v2 --replicas 3 -p 8081:8080 gcr.io/google-samples/hello-app:2.0

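Version 1 of the service app runs with 2 replicas and version 2 with 3 replicas, for a total of 5 containers distributed across the nodes. Note that sudo docker ps lists only the containers on the node where it is run, so run it on each node to see all 5. On the manager, sudo docker service ps my-web shows where each replica of a service was placed.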
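To see which node each replica landed on, docker service ps accepts a Go-template --format string. A minimal sketch, where the function name show_placement is only for illustration:

```shell
# Prints "<task name> <node> <current state>" for every task of a service.
# Must be run on a manager node.
show_placement() {
  service="$1"
  sudo docker service ps "$service" \
    --format '{{.Name}} {{.Node}} {{.CurrentState}}'
}
```

Running show_placement my-web and show_placement my-web-v2 should together list the 5 tasks and the nodes they run on.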

To test access to the service app:

watch -tn1 curl -s localhost:8080

# output

Hello, worlds!

Version: 1.0.0

Hostname: 1209918cadc0 # the hostname may differ on each request

watch -tn1 curl -s localhost:8081

# output

Hello, worlds!

Version: 2.0.0

Hostname: 955c20a6e33a # the hostname may differ on each request
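Since each replica reports its own container hostname, counting the distinct hostnames over several requests gives a rough check that requests are spread across replicas. A small sketch; the helper names count_hostnames and probe_replicas are my own and not part of the setup above:

```shell
# Reads hello-app responses on stdin and prints the number of
# distinct "Hostname:" values found.
count_hostnames() {
  awk '/^Hostname:/ { seen[$2] = 1 }
       END { n = 0; for (h in seen) n++; print n }'
}

# Sends N requests (default 10) to the given URL and prints how many
# distinct hostnames answered.
probe_replicas() {
  url="$1"
  n="${2:-10}"
  i=0
  while [ "$i" -lt "$n" ]; do
    curl -s "$url"
    i=$((i + 1))
  done | count_hostnames
}
```

With the services above, probe_replicas localhost:8080 20 should print at most 2 and probe_replicas localhost:8081 20 at most 3, matching the replica counts.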

Instance Group

Add each Docker host to an unmanaged instance group, and use this instance group as the backend of the load balancer's backend services. Define 2 named ports on the instance group, one for each service, so that the load balancer can route requests to the right backend service based on the URL map.
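With gcloud, this could look roughly like the sketch below. The group name swarm-ig, the zone, the instance names manager and worker, and the port names web-v1 and web-v2 are assumptions for illustration, not values taken from this setup.

```shell
ZONE="asia-southeast2-a"   # assumption: use the zone of your instances

create_instance_group() {
  # Unmanaged group that holds the existing Docker hosts.
  gcloud compute instance-groups unmanaged create swarm-ig --zone="$ZONE"
  gcloud compute instance-groups unmanaged add-instances swarm-ig \
    --zone="$ZONE" --instances=manager,worker
  # One named port per service; backend services refer to these names.
  gcloud compute instance-groups unmanaged set-named-ports swarm-ig \
    --zone="$ZONE" --named-ports=web-v1:8080,web-v2:8081
}
```

Named ports are what let two backend services share one instance group while targeting different ports.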

Expose Public

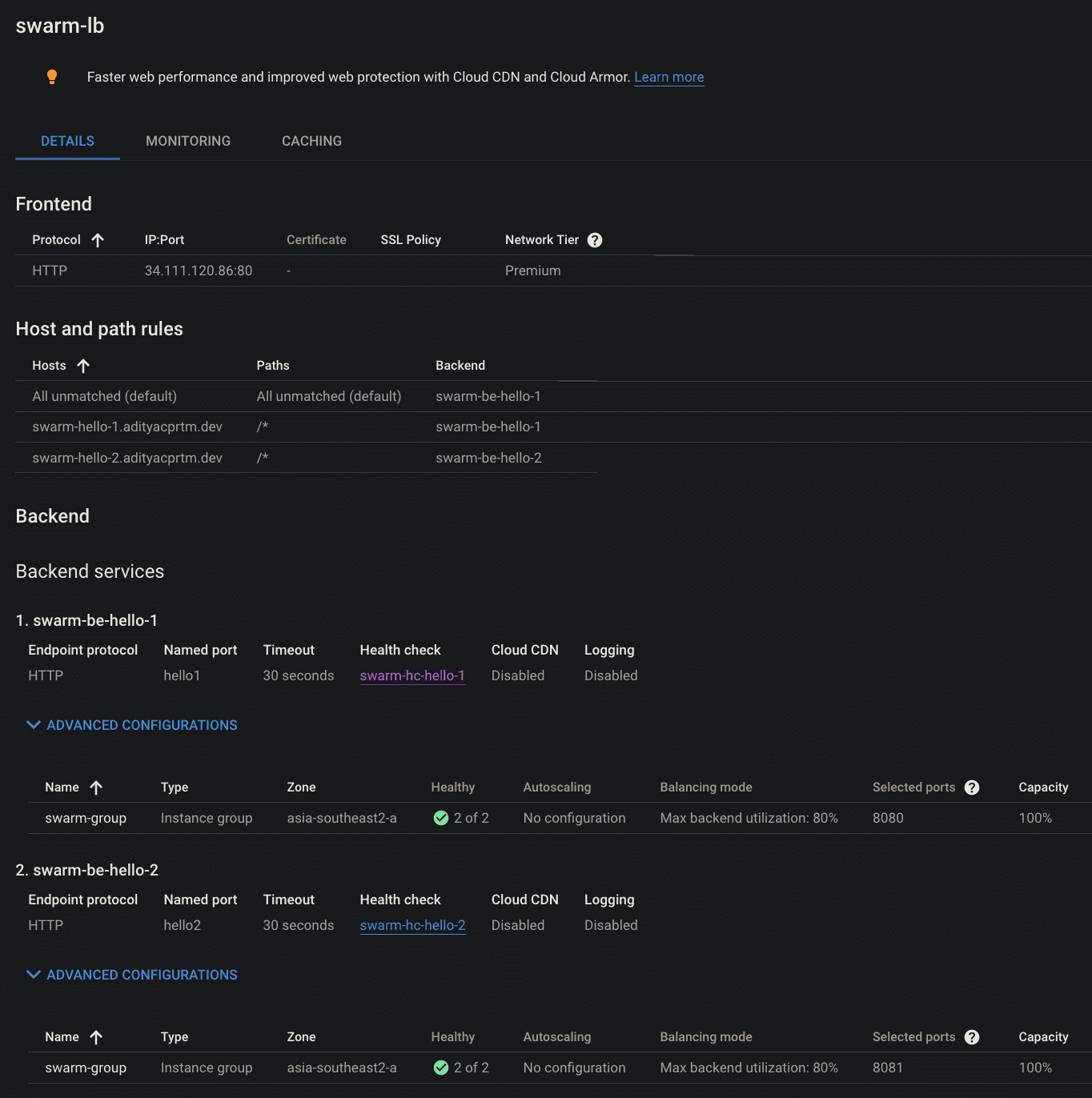

Before pointing the load balancer at the instance group, make sure the firewall allows ports 8080 and 8081 on the nodes.
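Google's load balancers and health checks reach the backends from the ranges 130.211.0.0/22 and 35.191.0.0/16, so a rule along these lines should be enough. This is a sketch: the rule name allow-lb-to-swarm, the default network, and the swarm-node tag are hypothetical.

```shell
allow_lb_traffic() {
  # Allow load balancer and health check traffic to both service ports.
  gcloud compute firewall-rules create allow-lb-to-swarm \
    --network=default \
    --allow=tcp:8080,tcp:8081 \
    --source-ranges=130.211.0.0/22,35.191.0.0/16 \
    --target-tags=swarm-node
}
```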

Create an HTTP(S) load balancer and configure its backend services to use the instance group.
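The same steps can be sketched with gcloud. This sketch assumes an instance group named swarm-ig with named ports web-v1 and web-v2 already exists in the given zone; those names, the zone, and all resource names below are hypothetical. It covers plain HTTP on port 80 only. Serving HTTPS, as in the test further down, additionally needs a certificate and a target HTTPS proxy.

```shell
ZONE="asia-southeast2-a"   # assumption: zone of the instance group

create_backend() {
  # $1 = suffix (v1 or v2), $2 = port, $3 = named port on the instance group
  gcloud compute health-checks create http "hc-$1" --port="$2"
  gcloud compute backend-services create "bs-$1" \
    --global --protocol=HTTP --port-name="$3" --health-checks="hc-$1"
  gcloud compute backend-services add-backend "bs-$1" \
    --global --instance-group=swarm-ig --instance-group-zone="$ZONE"
}

create_load_balancer() {
  create_backend v1 8080 web-v1
  create_backend v2 8081 web-v2
  # Route by host name: v1 is the default, v2 gets its own host rule.
  gcloud compute url-maps create swarm-map --default-service=bs-v1
  gcloud compute url-maps add-path-matcher swarm-map \
    --path-matcher-name=v2 --default-service=bs-v2 \
    --new-hosts=swarm-hello-2.adityacprtm.dev
  gcloud compute target-http-proxies create swarm-proxy --url-map=swarm-map
  gcloud compute forwarding-rules create swarm-fr \
    --global --target-http-proxy=swarm-proxy --ports=80
}
```

Routing by host name in the URL map is one way to send each domain to its own backend service. Path-based rules would work the same way with --path-rules.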

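IMHO, the load balancer's health check mechanism is not optimal in this deployment. Swarm's routing mesh can answer a request on a published port from a container on any node, so an HTTP check pointed at the service app duplicates the health management Swarm already does for its tasks and does not represent the health of the instance itself. I would suggest deploying a simple daemon on each node to report instance health instead.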
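The check such a daemon performs could be as simple as asking the local Docker engine for its Swarm state. The sketch below covers only the check itself and leaves out exposing the result over HTTP for the load balancer. The function names are my own, and treating only the active state as healthy is an assumption.

```shell
# Maps the local Swarm state to a health verdict.
node_health() {
  case "$1" in
    active) echo "healthy" ;;
    *)      echo "unhealthy" ;;
  esac
}

# Asks the local Docker engine for this node's Swarm state.
# An unreachable engine yields an empty state, which counts as unhealthy.
check_node() {
  state="$(sudo docker info --format '{{.Swarm.LocalNodeState}}' 2>/dev/null)"
  node_health "$state"
}
```

Unlike a check against a published service port, this reflects the state of the node it runs on.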

Finally, configure DNS records that point the domains at the load balancer IP. We can then test from a client VM or a local machine:

client:~$ watch -tn1 curl -s https://swarm-hello-1.adityacprtm.dev

# output

Hello, worlds!

Version: 1.0.0

Hostname: 1209918cadc0 # the hostname may differ on each request

client:~$ watch -tn1 curl -s https://swarm-hello-2.adityacprtm.dev

# output

Hello, worlds!

Version: 2.0.0

Hostname: 955c20a6e33a # the hostname may differ on each request

That's it!